Speed matters. Research shows over and over that when a website gets faster, user activity increases. Even if the change is small, such as half a second, and users are perhaps not conscious of it, they will rate the overall experience as better. Besides being more popular, fast websites can also handle more traffic with the same resources than slower ones. Put differently, a fast site saves resources, as less is needed to serve it.

Choosing the fastest web server is a vital decision for any software architect, as the web server is often the heart of a web system architecture. Surprisingly, there were no recent and complete benchmarks on this available online, so we decided to run our own.

We tested the following web servers and reverse proxies:

- Apache 2.2 (prefork, threaded and event versions)

- Nginx 1.1

- Lighttpd 1.4

- Varnish 3.0

- Squid 3.1

The test server was a virtual machine with 1 GB of RAM and 1 CPU core, running on a KVM host. The virtual server hosted a fresh install of SUSE 11 SP2 with Linux kernel 3.0.42-0.7.3.x86_64. Benchmarks were run from the host machine over a virtio network device, simulating a gigabit local network.

We did not include application servers or databases, as that would add too many variables and too much complexity. Instead we tested only the simplest possible scenario: delivering static files such as HTML pages and CSS, JavaScript and image files. The servers were run with almost the default configurations they come packaged with; we only did some minor tweaking, such as turning logging off.

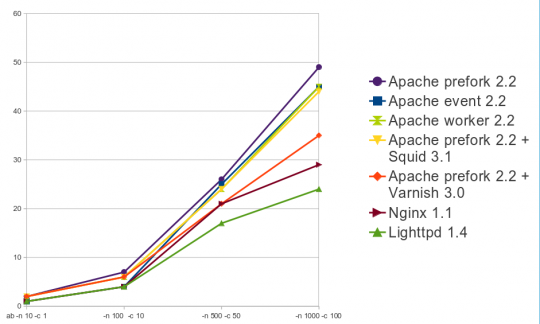

Speed of individual requests

First we tested the speed of individual requests using Apache Bench, for example:

ab -c 1 -n 10 http://example.com/index.html

The option -c sets the number of concurrent connections and -n sets the total number of requests.

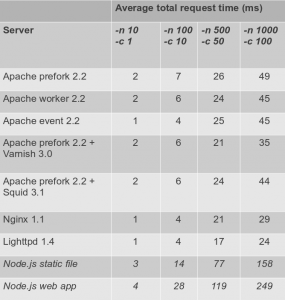

The result was the average total time per request, in milliseconds. This represents how fast a random visitor will get the files from the web server. The first measurement was for a low-load situation, then we ramped the number of simulated simultaneous users up to a hundred.
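As a rough illustration of how such a ramp-up could be scripted, the sketch below runs ab at several concurrency levels and pulls the mean "Time per request" figure out of its report. The function names, the concurrency levels (1, 10, 50, 100) and the request count of 1000 are assumptions made for the example, not the exact values used in this benchmark.

```shell
#!/bin/sh
# Extract the first "Time per request" value (mean, in ms) from ab output on stdin.
mean_request_ms() {
    awk '/^Time per request:/ { print $4; exit }'
}

# Run ab at several concurrency levels and print "concurrency mean_ms" pairs.
# The levels and the request count below are illustrative assumptions.
sweep() {
    url=$1
    for c in 1 10 50 100; do
        ms=$(ab -q -c "$c" -n 1000 "$url" | mean_request_ms)
        echo "$c $ms"
    done
}

# Usage (requires ab and a reachable server):
#   sweep http://example.com/index.html
```

Keeping the parsing in its own function makes it possible to check it against a saved ab report without generating any load.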

Quite surprisingly, there were no big differences: all servers performed within 10% of each other. The two fastest ones were Nginx and Lighttpd.

All of the servers were really fast in delivering static files. For comparison, the table also includes figures on how long it takes for a Node.js app to deliver either a static page or a dynamic page loaded from a database. Those times are significantly longer, even though Node.js is generally regarded as one of the fastest app servers.

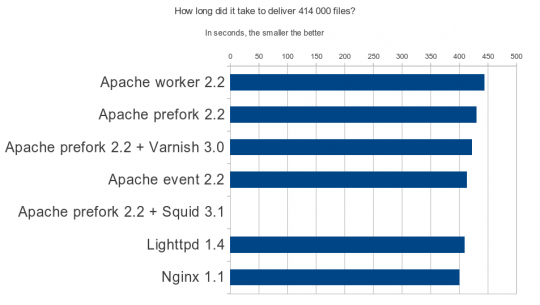

Speed while handling massive traffic

In the second scenario we measured how long it takes for the servers to cope with a huge number of requests. To simulate the traffic of a real website, we used wget to mirror a static version of a real website we’ve made, then listed the URLs and ran Siege so that it requested all the 400+ files with 1000 concurrent connections, amounting to 400 000+ static page and file requests.

The command was:

siege -b -c 1000 -r 403 --file=urls.txt
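One possible way to produce the URL list from a mirrored site, and to sanity-check the total request count, is sketched below. The helper names, the mirror directory and the base URL are hypothetical; the sketch also assumes file names that need no URL encoding.

```shell
#!/bin/sh
# Turn a directory of mirrored files into a URL list, one URL per line.
# mirror_dir and base_url are hypothetical parameters for this example.
list_urls() {
    mirror_dir=$1
    base_url=$2
    ( cd "$mirror_dir" && find . -type f | sed "s|^\./|$base_url/|" | sort )
}

# Total requests issued: concurrent users times repetitions per user.
total_requests() {
    echo $(( $1 * $2 ))
}

# Usage:
#   list_urls ./mirror http://example.com > urls.txt
#   total_requests 1000 403
```

With 1000 concurrent users and 403 repetitions each, the total comes to 403 000 requests, which matches the "400 000+" figure above.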

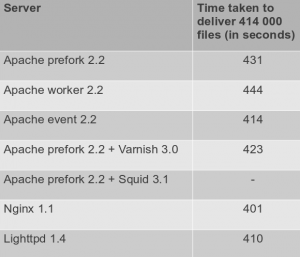

Again, the results showed that the servers perform pretty much the same, while Nginx and Lighttpd are just slightly better than the rest.

For Squid there is no result, since there were too many failed requests. This level of traffic was obviously too much for Squid.

While running these tests we also observed the CPU, memory and network usage. For Nginx and Lighttpd the CPU usage was 40-50%, while for all other servers CPU usage was 70-90%. The biggest memory consumption was while running Apache prefork with 328 MB RAM, while the lowest was for Apache threaded with 237 MB RAM. All servers had a network output of 100+ MB/s, except for Squid which was a little bit under.

Conclusion

The benchmark shows that Lighttpd and Nginx are slightly faster than the others, and significantly better in terms of CPU usage. The decision between these two must be based on other factors, however, such as an Ohloh comparison. Since Nginx seems to have more development activity, more features and a lot of original and third-party documentation, it seems to be the better recommendation. Indeed, Nginx is today the third most popular web server in the world.

Original presentation in Finnish

Extra tips

Benchmark the network interface speed

During the first benchmark all servers performed the same. We found out that the reason was that the network interface was too slow, so we switched the virtual network device to the virtio model. If you want to benchmark the network interface speed, you can run this on the server to listen for incoming benchmark connections:

iperf -s -w 65536 -p 12345 -I 5

and then initiate the benchmark from the client with:

iperf -c 192.168.122.131 -w 65536 -p 12345 -t 60 -d

Increase socket limit on Linux

On some machines, benchmarking with 1000 simultaneous connections is capped by the per-process limit on open file descriptors, which every socket counts against. To check the current limit, run:

ulimit -n

and to increase it, run for example:

ulimit -n 2000
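The check and the increase can be combined into one small helper, sketched here. The function name is hypothetical, and the sketch assumes a shell whose ulimit builtin supports -n and -H (bash and dash do).

```shell
#!/bin/sh
# Raise the open-file limit for this shell if it is below what the benchmark needs.
# ensure_fd_limit is a hypothetical helper name used for illustration.
ensure_fd_limit() {
    needed=$1
    current=$(ulimit -n)
    if [ "$current" = "unlimited" ] || [ "$current" -ge "$needed" ]; then
        echo "ok: limit is $current"
        return 0
    fi
    if ulimit -n "$needed" 2>/dev/null; then
        echo "raised: limit is now $(ulimit -n)"
    else
        echo "failed: hard limit is $(ulimit -Hn)" >&2
        return 1
    fi
}

# Usage, before starting the load generator from the same shell:
#   ensure_fd_limit 2000
```

The new limit applies only to the current shell and the processes it starts, so the benchmark tool has to be launched from that same shell. Raising the limit above the hard limit requires root privileges.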

Why did you choose to benchmark a server with only one vCPU? Nginx should show a much better performance if you benchmark it on a SMP host. Web servers today are rarely single-CPU machines.

Why did you choose to benchmark using only a single load generator host?

Have you considered benchmarking Cherokee or G-WAN?

And please share full web server configurations used with benchmarks in your reports next time.

Where’s LSWS server in the equation?

It beat the SH!7 out of them all!

might be nice on the graphs to say “smaller is better” or what not…